AI Ethics 101: What is Explainable AI?

XAI: The Next Frontier in Machine Learning

From facial recognition to autonomous manufacturing to targeted social media advertising, Artificial Intelligence is here to stay. As AI becomes the norm across a range of industries, and even those previously considered low-tech come to rely on AI systems, explainability becomes more and more important.

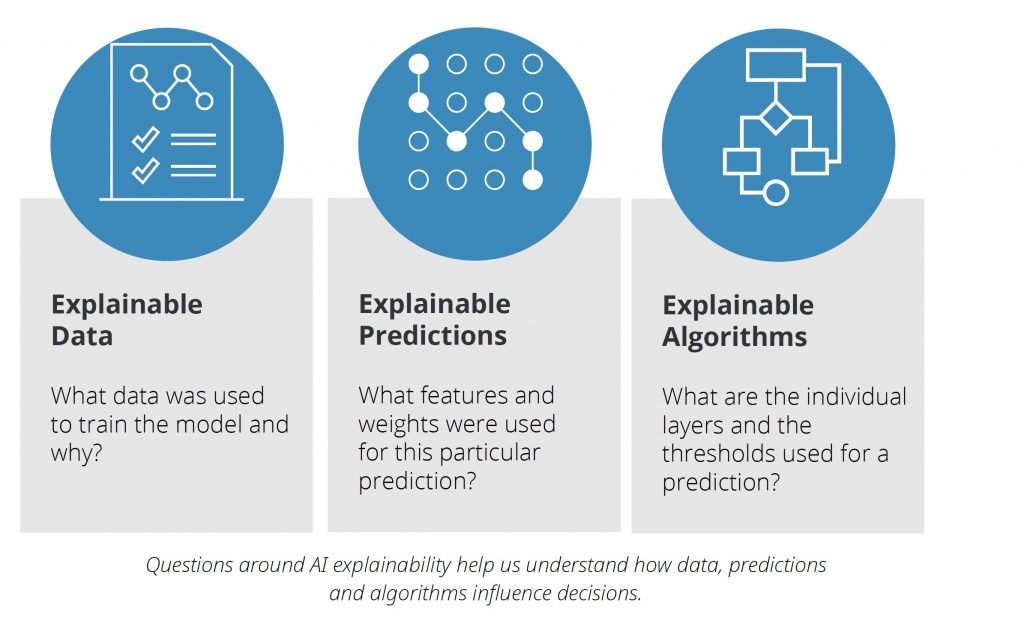

However, as explainability and transparency have grown in importance, machine learning models have become increasingly complex and harder to explain. This creates a black box effect, where the inputs and outputs of AI models are known but the rest of the process is opaque to the humans who use or are affected by these systems. This is especially salient with Deep Learning models, where the system improves (and often becomes more complex) as it is exposed to more data. The least accessible details of a model, namely how and why a given output comes to be, are what most people want to know.

Alongside the curiosity about what is really happening within a machine learning or deep learning model, there are security and ethical concerns surrounding AI technology. We know these systems can be very powerful, but understanding the limitations and inner workings of each model is increasingly important for deciding whether we can trust its outputs. A lack of explainability hampers our ability to trust AI systems.

Achieving Transparency with Explainable AI (XAI)

To address the growing concerns regarding the ethics and transparency of machine learning algorithms, government agencies and organizations have started pursuing Explainable AI initiatives. The EU’s General Data Protection Regulation, which took effect in 2018, contains provisions often described as a right to explanation, while the Defense Advanced Research Projects Agency (DARPA) has launched programs to research and produce explainable AI solutions.

Goals of XAI

Produce explainable models that allow inspection of decisions made by the AI or ML systems

Give human users the ability to bound AI-driven tasks and decisions

Provide human users with a rationale for outputs, so that we can understand and trust the system

Check out this article for an overview of all the important concepts in AI Ethics.

Business Implications of Explainable AI

This is all great, but if you’re using AI and machine learning in a business context, how does this apply to you?

With automated graphs, dashboards, data analytics, and large-scale machine learning models becoming a part of high-stakes decision making, smart and critical business executives or data scientists often ask questions like:

Why was this prediction made?

What are the factors that contributed to this conclusion?

How certain is the model in its output?

These questions are exactly what XAI aims to answer.
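As a rough illustration of what answering these three questions can look like, here is a minimal sketch for the simplest possible case: a small linear scoring model. The loan-approval scenario, the feature names, and the weights are all hypothetical and chosen only for illustration. In a linear model each feature's contribution is simply its weight times its value, so the "why" can be read off directly; complex models such as deep networks do not decompose this cleanly and typically need approximation techniques instead.

```python
import math

# Hypothetical weights for an illustrative loan-approval model.
# These values are assumptions made up for this sketch, not real data.
WEIGHTS = {"income": 0.8, "debt_ratio": -1.5, "years_employed": 0.4}
BIAS = -0.2


def explain(features):
    """Return the predicted probability and each feature's contribution to the score."""
    # In a linear model, a feature's contribution is weight * value.
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BIAS + sum(contributions.values())
    # The logistic function turns the raw score into a probability,
    # which serves as a simple measure of how certain the model is.
    probability = 1.0 / (1.0 + math.exp(-score))
    # Rank by absolute size so the most influential factors come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


# Hypothetical applicant with already-normalized feature values.
applicant = {"income": 1.2, "debt_ratio": 0.9, "years_employed": 0.5}
probability, ranked = explain(applicant)

print(f"Approval probability: {probability:.2f}")  # how certain is the model?
for name, contribution in ranked:                  # which factors contributed, and how?
    print(f"{name}: {contribution:+.2f}")
```

The ranked contributions address "why was this prediction made?" and "what factors contributed?", while the probability addresses "how certain is the model?". This readability is the main appeal of simple models; the trade-off is that they may not capture patterns a more complex model can.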

Experts with Experience in XAI

AI technology is advancing faster than ever – inference, cognitive computing, data science, machine learning, algorithms… You might find yourself looking at your data and wondering where to even start.

Let us tackle your complex data problems and develop explainable systems. If you would rather learn to handle your own data, we offer hands-on workshops and training.

Further Reading

AI Ethics 101: Important Concepts

The Alignment Problem: Machine Learning and Human Values

AI Ethics

Oxford Handbook of Ethics of AI

The Ethics of AI and Robotics: A Buddhist Viewpoint