BERT: How to Handle Long Documents

The Problem with BERT

BERT, or Bidirectional Encoder Representations from Transformers, is currently one of the most famous pre-trained language models available to the public. It’s proved incredibly useful at a diverse array of tasks, including Q&A and classification.

However, BERT can only take input sequences up to 512 tokens in length. This is quite a large limitation, since many common document types are much longer than 512 words. In this point we’ll explain and compare a few methods to combat this limitation and make it easier for you to use BERT with longer input documents.

Why can’t BERT handle long documents?

BERT inherits it’s architecture from the transformers, which themselves use self-attention, feed-forward layers, residual connections, and layer normalization as their foundational components. If you’re not familiar with transformer architecture, you might read Deep Learning 101: What is a Transformer and Why Should I Care? before continuing with this article.

The issues with BERT and long input documents stem from a few areas of BERT’s architecture.

Transformers in themselves are autoregressive, and BERT’s creators noted a significant decrease in performance when using documents longer than 512 tokens. So, this limit was put to guard against low quality output.

The space complexity of the self-attention model is O(n²). Quadratic complexity like this makes the modes very resource heavy to fine-tune. The longer the input the more resources you will need to fine-tune the model. The quadratic complexity makes this prohibitively expensive for most users.

Given the above two points, BERT is pre-trained with positional encodings which are based on shorter input sequences. This means the model won’t generalize well to longer sequences, and the expense of fine-tuning for diminishing returns means there are limited ways around this issue.

But I have long documents, so what do I do now?

Fortunately, there are some things you can do to use BERT effectively with longer input documents. Here are some proven techniques to try.

Trimming the Input Sequence

This is possibly the most common technique when working with BERT and long input documents. Given that BERT performs well with documents up to 512 tokens, merely splitting a longer document into 512 token chunks will allow you to pass your long document in pieces.

For longer continuous documents - like a long news article or research paper - chopping the full length document into 512 word blocks won’t cause any problems because the document itself is well organized and focused on a single topic. However, if you have a less continuous block of text - like a chatbot transcript or series of Tweets - it may have chunks in the middle that are not relevant to the core topic.

Averaging Segment Outputs’ votes

Another common technique is the divide a long document into overlapping segments of equal length, and using a voting mechanism for classification. This will alleviate the issues that come with having a non-continuous document like a conversation transcript. Using votes from various blocks of the larger document will incorporate information from the entire thing.

The way this works in practice is to divide the document into segments and run each segment through BERT, to get the classification logits. Then by combining the votings (one per segment), we can get an average that we take as the final classification.

The downside here is that you can’t fine tune BERT over the task since the loss is not differentiable. You also miss some shared information between each segment, even though there is overlap. This can have downstream implications that are specific to the architecture of your analysis pipeline.

Conclusion

Using BERT with long input documents depends on your specific task. There are newer models - like RoBERTa - that were created to address the weaknesses of BERT. We will talk more about those in a future post. For complicated tasks that require information from the entire document, or where you’re using a non-continuous document, using a BERT variant like RoBERTa may be the optimal solution.

For a deeper dive into the mechanics behind transformers, check out other posts from our Deep Learning 101 series.

What is a Transformer and Why Should I Care?

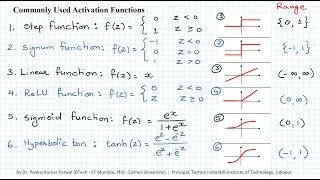

Transformer Activation Functions Explainer

For a guide through the different NLP applications using code and examples, check out these recommended titles:

Want a deep dive into this or related topics, need in depth answers or walkthroughs of how to implement an NLP pipeline using BERT? Send us an email to inquire about hands on consulting or workshops!